AI security means adopting best practices so that AI models and their data are protected from manipulation or unauthorized access. Through strong AI security, businesses can build trust and prevent data leaks or model exploitation.

Using AI chatbots in 2025? Enjoying operational efficiency? But what if your business reputation is on the line? The increased usage of AI chatbots brings new privacy and security risks.

Most modern AI chatbots collect and store personal data during conversations. If that data is not secured properly, it can be exposed. And your customers know this! Studies show that around 70% of users worry about how their personal data is stored and used by chatbots.

Thus, in 2025, if your business is not protecting chatbot data, be ready for high customer churn! Need solutions? Read on for 7 ways your business can achieve high AI security. But first, let’s see how serious this threat is!

The Reality of AI Data Leaks in 2025!

AI-related breaches are surging. About one in three of the large organizations studied suffered three or more separate AI-related security breaches within a single 12-month period!

The worst part? When a company’s sensitive data is stolen or exposed, the financial damage is high. On average, each breach now costs $4.45 million worldwide. This includes multiple costs, like:

- Investigations

- Legal actions

- Loss of customers

- Damage to reputation

But Why Do Leaks Happen?

AI systems are not limited to their training data. They also take in new inputs, such as what users type into them or what they receive through connected CRM systems or email platforms. Studies show that about 27.4% of the data fed into chatbots is classified as “sensitive”. This data can leak in several ways:

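| How does data privacy in chatbots get compromised? | Explanation |
| --- | --- |
| Human Error | Employees paste sensitive information into public chatbots, where it can stay in the system and be exposed later. |
| System Connections | Chatbots connected to CRM systems or email platforms take in data from those sources, so a weak link can expose it. |
| Malicious Prompts | Attackers write inputs designed to trick the chatbot into revealing data it should keep private. |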

Why are Generic Protections Not Enough?

Want to know the biggest AI security risk? It is company-specific data, meaning information unique to your business, such as:

- Strategies

- Customer details

- Intellectual property

- Financial plans

Most AI providers add generic guardrails, such as blocking illegal requests or harmful language. These are useful but limited, because they do not know what is confidential to your business! Without security measures specific to your company, an AI system could unintentionally leak this data and create serious business risks.
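To make the idea of a company-specific safeguard concrete, here is a minimal sketch of an output filter that masks confidential terms before a chatbot reply is sent. The term list and the `redact_reply` function are hypothetical names used only for illustration; a real deployment would likely need a much broader set of checks.

```python
import re

# Hypothetical examples of company-specific terms; replace with your own list.
CONFIDENTIAL_TERMS = ["Project Falcon", "Q3 pricing plan"]

# One case-insensitive pattern that matches any listed term.
_TERMS_PATTERN = re.compile(
    "|".join(re.escape(term) for term in CONFIDENTIAL_TERMS),
    flags=re.IGNORECASE,
)


def redact_reply(reply: str) -> str:
    """Mask confidential terms in a chatbot reply before it reaches the user."""
    return _TERMS_PATTERN.sub("[REDACTED]", reply)
```

A filter like this sits between the AI model and the customer, so it works no matter which provider you use. It only catches exact terms, so treat it as one layer of protection and not the whole defense.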

7 Ways Your Business Can Secure Customer Messaging in 2025!

Regulatory actions and fines connected to chatbot privacy failures have increased. Privacy laws like GDPR, CCPA, and LGPD, along with sector-specific rules and frameworks (HIPAA, SOC 2), now set stringent requirements for chatbot operations.

But you can’t rely on laws alone. And most leaders don’t! About 32% of U.S. CX leaders cite data privacy in chatbots as the primary obstacle to chatbot adoption in enterprises. Does this mean you shouldn’t take advantage of the latest technology? Nope! Instead, as a VP or director of a D2C company earning $5M+ in revenue, you should secure customer messaging and set the highest standards of AI security. Below are seven major ways to do so:

1. Build Security Into AI From the Start

Security should not be added at the end. Instead, your developers must think about risks before they launch or use an AI tool. This approach is called a “Secure Software Development Lifecycle (Secure SDLC)”.

For example, you should:

- Map out how attackers might try to misuse the tool (threat modeling).

- Check the code carefully (code reviews).

- Run tests to spot weaknesses (vulnerability testing).

By doing this, you reduce the chances of leaks or attacks before they happen.

2. Be Honest and Clear With Users!

If your AI tool or chatbot collects personal information, you must be upfront about it. What does that mean? Tell people exactly:

- What data you are collecting

- Why you need it

- How you plan to use it

Furthermore, you should always ask for their permission before storing or processing their details. For D2C companies, obtaining user consent and maintaining this level of transparency supports AI compliance and reduces legal risk.

Also, your customers feel safer when they know their data is handled openly and not hidden behind unclear policies.
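The consent rule can be sketched in code as follows. This is a minimal illustration, assuming an in-memory store; `ChatStore`, `ConsentRecord`, and the purpose label `"order_support"` are hypothetical names, and a real system would use a database and your own purpose labels.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRecord:
    user_id: str
    purpose: str          # why the data is collected, e.g. "order_support"
    granted_at: datetime  # when the user agreed


@dataclass
class ChatStore:
    """In-memory stand-in for a real database (hypothetical)."""
    consents: dict = field(default_factory=dict)
    messages: list = field(default_factory=list)

    def grant_consent(self, user_id: str, purpose: str) -> None:
        self.consents[(user_id, purpose)] = ConsentRecord(
            user_id, purpose, datetime.now(timezone.utc)
        )

    def revoke_consent(self, user_id: str, purpose: str) -> None:
        self.consents.pop((user_id, purpose), None)

    def save_message(self, user_id: str, purpose: str, text: str) -> bool:
        """Store the message only if the user agreed to this purpose."""
        if (user_id, purpose) not in self.consents:
            return False
        self.messages.append((user_id, purpose, text))
        return True
```

Tying consent to a specific purpose means that agreeing to order support does not silently allow marketing use, and recording the timestamp gives you something to show during a compliance check.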

3. Follow Ethical Rules in AI

AI should not only be secure but also fair and responsible! This is where AI ethics guidelines come in. When your business follows them, it ensures your chatbot or AI tool:

- Treats users fairly

- Avoids bias

- Is accountable for its actions

For example, say your chatbot handles sensitive topics like health or finances. In that case, you need rules to prevent misleading answers or misuse of private data.

By following ethical standards, you can:

- Achieve AI compliance

- Protect your customers

- Strengthen your business reputation

4. Lock Data With Encryption

For those unaware, encryption is like putting your data in a “locked safe” before sending it across the internet. When customers share information with your chatbot, encryption ensures that only the intended recipient can read it.

To achieve high AI security, you can use standard protocols such as TLS (Transport Layer Security). TLS makes it tough for hackers to intercept or steal the data while it is being transmitted between the user and your system.
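As a small illustration, here is how a Python service might set up TLS for its outgoing connections using the standard library `ssl` module. The function name `make_tls_context` is hypothetical, and requiring TLS 1.2 as the minimum version is an assumption you should adjust to your own policy.

```python
import ssl


def make_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context with certificate checks enabled."""
    # The default context verifies server certificates and hostnames.
    context = ssl.create_default_context()
    # Assumed policy: refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The context can then be passed to standard clients, for example `urllib.request.urlopen(url, context=make_tls_context())`, so that every call your chatbot backend makes is encrypted and checked.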

5. Control Access With Authentication

Not everyone should have the same level of access to your chatbot system! Through authentication, you can verify that a user is really who they claim to be. It can be done through:

- Passwords

- Codes

- 2FA (two-factor authentication)

- Other secure methods

Furthermore, authorization ensures that each person only has access to the parts of the system they actually need. This prevents misuse and protects sensitive information from being exposed to the wrong people.
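The difference between authentication (who are you?) and authorization (what may you do?) can be sketched like this. The roles, actions, and iteration count are assumptions chosen for illustration, and the function names are hypothetical.

```python
import hashlib
import hmac
import os

# Hypothetical roles and the actions each one may perform.
ROLE_PERMISSIONS = {
    "agent": {"read_chat"},
    "admin": {"read_chat", "export_data", "delete_data"},
}

ITERATIONS = 200_000  # assumed work factor; tune it for your hardware


def hash_password(password, salt=None):
    """Return (salt, digest) so that the plain password is never stored."""
    salt = salt or os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)
    return salt, digest


def verify_password(password, salt, expected_digest):
    """Authentication: check a login attempt against the stored digest."""
    _, digest = hash_password(password, salt)
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(digest, expected_digest)


def is_allowed(role, action):
    """Authorization: check whether a role may perform an action."""
    return action in ROLE_PERMISSIONS.get(role, set())
```

In this sketch a support agent can read chats but cannot export or delete data, and an unknown role gets no access at all.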

6. Collect Only What You Need

Always remember that the more personal information you collect, the more risk you carry! To reduce this risk, you should practice “data minimization”. This means you only ask for the details that are truly necessary for your chatbot to work.

For example, say your chatbot answers product questions. In that case, it may not need your customer’s full address. By limiting what your chatbot can store, you can reduce your risk and achieve better AI compliance.

Additionally, D2C companies and consumer brands earning $5M+ in revenue need to comply with laws such as GDPR or CCPA, and GDPR lists data minimization among its core principles. The benefit? They achieve high AI security and protect customer privacy.
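Data minimization can be enforced in code with an allow-list, so that anything not explicitly needed is dropped before it is stored. The sketch below assumes a product-question chatbot; the field names and the `minimize` function are hypothetical.

```python
# Assumption: a product-question chatbot only needs these fields.
ALLOWED_FIELDS = {"name", "email", "question"}


def minimize(record: dict) -> dict:
    """Keep only the allow-listed fields and drop everything else."""
    return {key: value for key, value in record.items() if key in ALLOWED_FIELDS}
```

An allow-list is usually safer than a block-list here, because a new field added by a form or an integration is dropped by default until someone decides it is truly needed.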

7. Check Systems Regularly

No system stays secure forever! As a VP or director of a D2C company, you must realize that new security threats appear constantly, and software can develop weaknesses over time. That is why you must run regular audits and make timely updates. Here is what each one means:

| Audit | Updates |
| --- | --- |
| A review of your chatbot’s security setup to find gaps or lapses and then eliminate them. | Timely patches that fix known problems quickly. |

The advantage? The ongoing cycle of audits and updates keeps your chatbot well defended against hackers. This significantly reduces the chances of a breach.
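Part of an audit can be automated. Here is a minimal sketch of a recurring check that compares a chatbot’s settings against a baseline. The configuration keys, the limits, and the `audit_chatbot` function are all assumptions made for illustration; your own checklist will differ.

```python
from datetime import date

# Assumed limits for illustration; set them from your own policy.
MAX_PATCH_AGE_DAYS = 30
MAX_RETENTION_DAYS = 90


def audit_chatbot(config: dict, today: date) -> list:
    """Return a list of findings; an empty list means every check passed."""
    findings = []
    if not config.get("tls_enabled", False):
        findings.append("Traffic is not encrypted with TLS")
    if not config.get("two_factor_required", False):
        findings.append("2FA is not required for staff accounts")
    if config.get("retention_days", 0) > MAX_RETENTION_DAYS:
        findings.append("Chat logs are kept longer than the retention limit")
    last_patched = config.get("last_patched")
    if last_patched is None or (today - last_patched).days > MAX_PATCH_AGE_DAYS:
        findings.append("Software has not been patched recently")
    return findings
```

Running a check like this on a schedule turns the audit from a once-a-year event into a habit, while a human review still covers the things a script cannot see.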

AI + Human is the Best Customer Support Combo! Achieve it Today with Atidiv.

The average cost of an AI-related data breach has reached a record high in 2025. Such privacy incidents now cause serious financial losses and legal trouble for organizations.

The government response? Around the world, regulatory penalties linked to chatbot privacy failures are increasing. Laws like GDPR, CCPA, LGPD, and HIPAA, and frameworks such as SOC 2, now require strict data protection and transparency in AI operations.

Thus, to strengthen AI security in your business, you should:

- Build security into AI systems from the design stage

- Collect only the data you truly need

- Monitor and audit your systems regularly

- Train employees to handle data safely

- Follow ethical and transparent AI practices

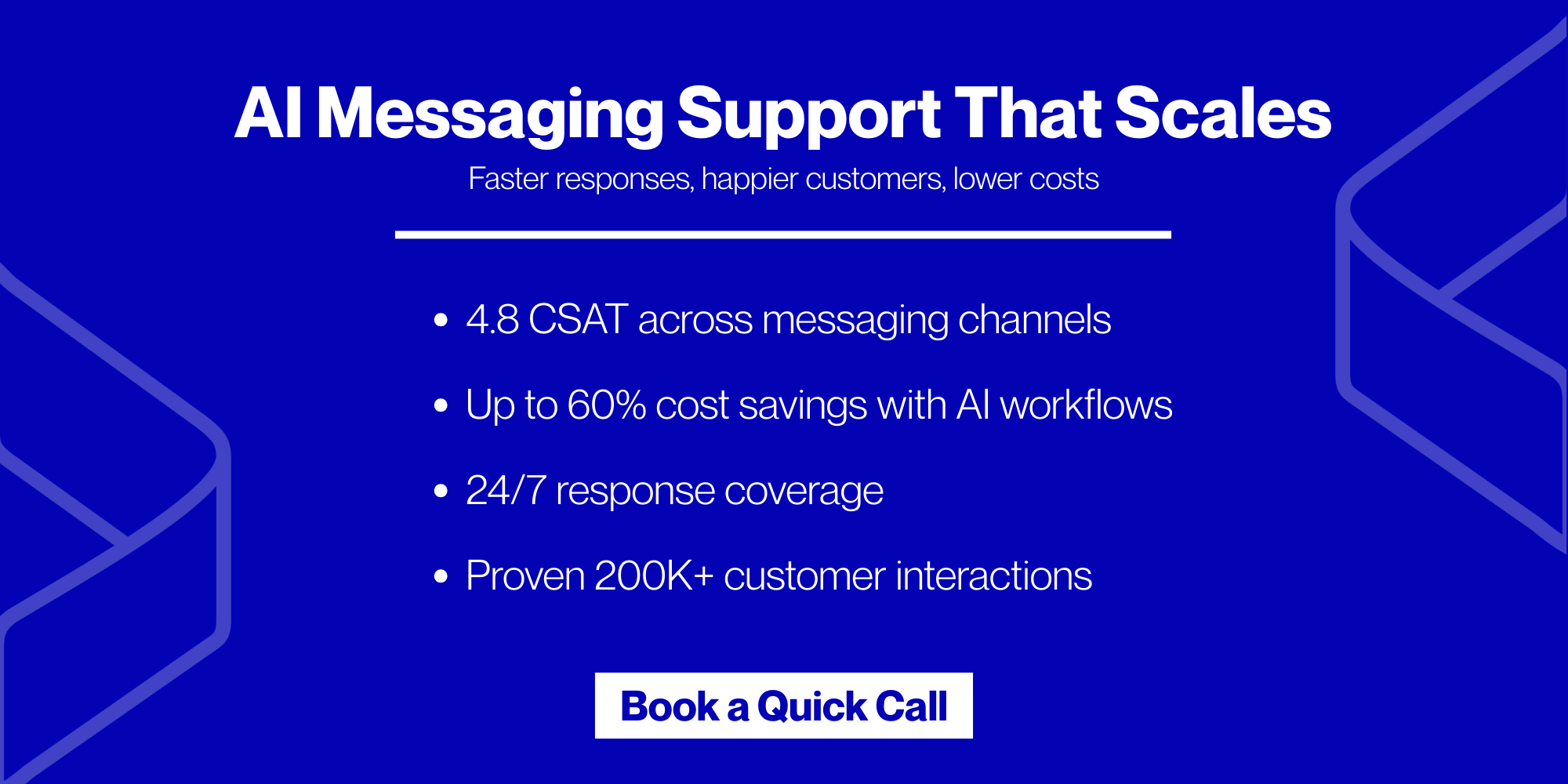

As for the benefits, it is true that AI improves productivity and accuracy! But want to know the most powerful setup? It is AI + human expertise. And that’s what Atidiv delivers. With 15 years of experience and 70+ global clients, we work across 20+ industries.

Outsource your customer support to Atidiv today and cut costs by up to 60%. Learn how through this free consultation call.

AI Security FAQs

1. How can AI security be compromised by my employees?

One study found that about 15% of employees admit to pasting sensitive information into public chatbots. This information can stay in the system and be exposed later, which creates serious privacy and security risks.

2. How can I make sure my chatbot follows privacy laws like GDPR or CCPA?

You must be 100% transparent about what data your chatbot collects and how it’s used. Next, to achieve strong AI compliance, you should:

- Always get user consent before collecting personal details

- Store information securely

- Allow users to delete their data when requested

- Perform regular compliance checks to stay within legal limits

3. How costly can non-compliance with data privacy laws be in 2025?

Failing to follow global privacy rules like GDPR can lead to heavy penalties. Under GDPR, the most serious violations can draw fines of up to €20 million or 4% of a company’s global annual turnover, whichever is higher.

Beyond fines, it can also damage your brand reputation and reduce customer trust permanently.

4. What steps can prevent an AI-related data breach in 2025?

In 2025, threats are more advanced. So, to combat them, you should:

- Encrypt your data

- Restrict access with authentication

- Review your chatbot activity regularly

- Update your software on time

Also, train your team regularly to avoid mistakes that could lead to unintentional data exposure.

5. Can AI security really be managed without large budgets or big teams?

Yes! As a VP or director of a D2C company earning $5M+ in revenue, you can start with simple and low-cost measures, such as:

- Regular software updates

- Secure passwords

- Data encryption

- Staff awareness programs

Additionally, you can consider partnering with digital customer experience solution providers like Atidiv. Such a partnership can strengthen your AI security and also keep expenses under control.